[AINews] Francois Chollet launches $1m ARC Prize • Buttondown

Chapters

AI Twitter Recap

AI Discord Recap

Interconnects (Nathan Lambert)

OpenInterpreter Discord

LLM Finetuning Discussions

LLM Finetuning (Hamel + Dan) ▷ #fireworks

Unsloth AI Discussions

Challenges with Deep Model Integration and Influence Functions

Perplexity AI - LM Studio Discussions

HuggingFace LM Studio Models and Discussion

HuggingFace Announcements and Discussions

Interconnects (Nathan Lambert) - News

OpenAccess AI Collective - General Help

llamafile Conversation

AI Twitter Recap

The AI Twitter recaps are generated by Claude 3 Opus; the authors also mention ongoing work on clustering and flow engineering with Haiku.

Apple Integrates ChatGPT into iOS, iPadOS, and macOS

- Apple partners with OpenAI to integrate ChatGPT into its devices, enabling AI-powered features such as document summarization and photo analysis.

- Concerns about privacy were raised despite assurances of user data protection.

Reactions to Apple's WWDC AI Announcements

- Mixed reactions to Apple's AI integration, with some questioning Apple's capability to ship AI independently.

- Benchmark comparisons show that Apple's on-device models perform well, while its server-side models lag behind GPT-4.

AI Discord Recap

The AI Discord Recap features updates on various AI-related discussions and developments in different Discord channels. This section covers anticipated enhancements in AI models, conversations around model compression and optimization strategies, benchmarks for affordable AWS instances, advancements in stable diffusion models, and insights on AI challenges and model deployment. Discussions on real-world ML applications, innovative AI tools like MARS5 TTS, and debates on AI expectations and capabilities are also highlighted. Memes and humor sections add a touch of light-heartedness to the technical discussions.

Interconnects (Nathan Lambert)

Engineers gave mixed feedback on OpenAI's collaboration with Apple, suggesting that the ChatGPT integration into Apple Intelligence may be superficial; the official announcement nevertheless emphasized user privacy, despite rumors and skepticism. Comparative benchmarks of Apple's on-device and server models prompted curiosity about how they perform against competing models.

Apple's decision to keep Apple Intelligence separate from Siri sparked discussion about its potential impact on user adoption and on perceptions of the new system's capabilities.

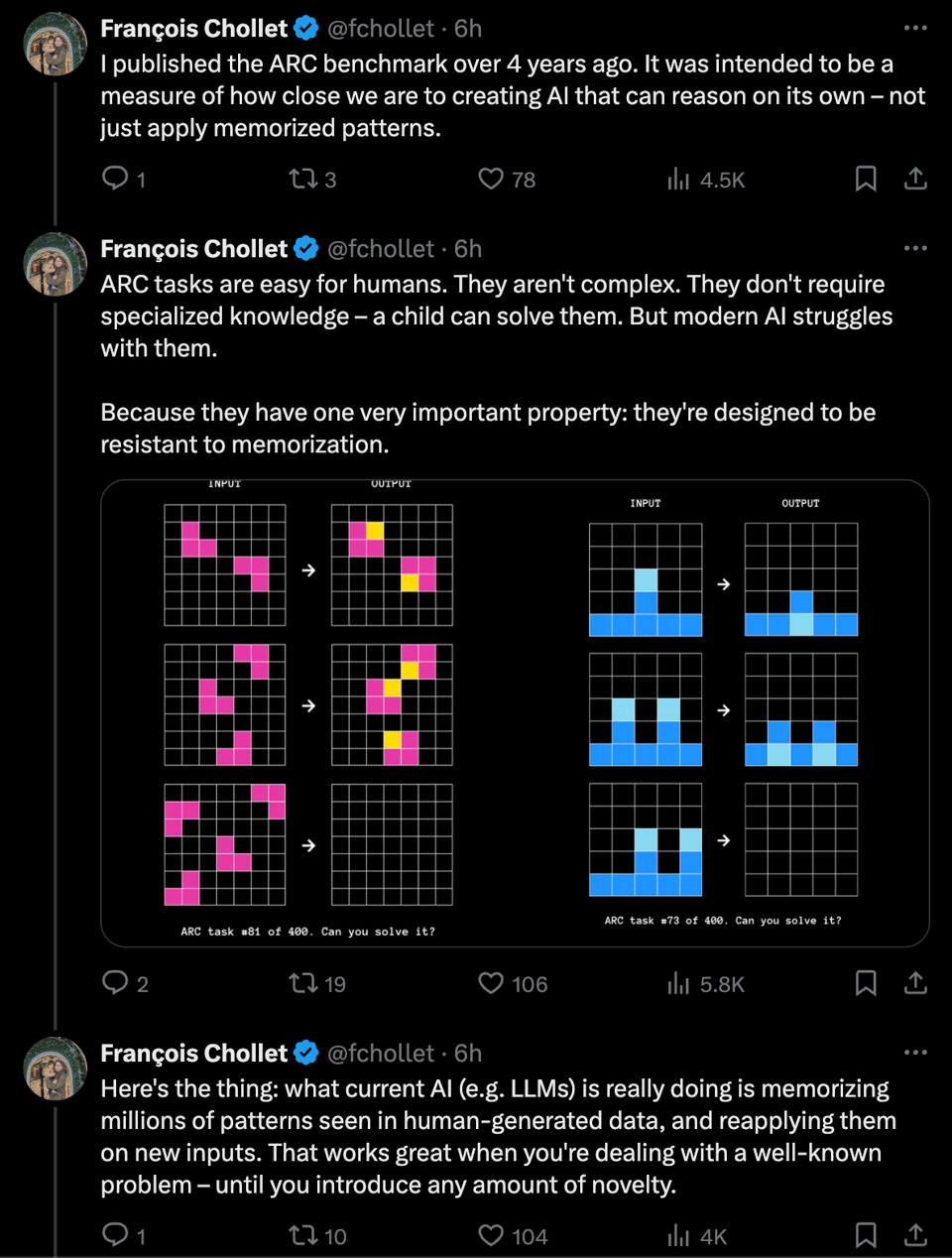

The forthcoming interview of François Chollet by **D

OpenInterpreter Discord

Apple Intelligence on the AI Radar

The community showed interest in integrating Open Interpreter with the privacy-centric AI capabilities described on Apple's Apple Intelligence page. Such an integration could use Apple's developer API to extend AI functionality across Apple devices.

SB 1047 in the Line of Fire

Dan Jeffries criticized the California AI Control and Centralization Bill (SB 1047), associated with Dan Hendrycks, arguing that it centralizes control over AI and threatens open-source AI innovation.

Arduino IDE Complications on Mac M1 Resolved

An issue running the Arduino IDE on Apple M1 Macs was resolved with a fix from a GitHub pull request, but the fix introduced new problems with Wi-Fi setup when the device restarts.

Linux as an Open Interpreter Haven

Members debated prioritizing Linux for future Open Interpreter development, with the goal of providing AI-assisted tools that do not depend on operating systems from major vendors such as Apple and Microsoft.

Personal Assistant that Remembers

A member shared work on extending Open Interpreter with a prompting system that can store, search, and retrieve information like a personal assistant, an approach to giving AI systems persistent memory.
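The store/search/retrieve idea can be illustrated with a minimal in-memory sketch. Everything here (the class names, the keyword-overlap scoring, the insertion-index IDs) is a hypothetical stand-in, not the member's implementation or an Open Interpreter API; a real assistant would more likely persist notes to disk and use embedding-based search.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class Note:
    text: str
    tags: set[str] = field(default_factory=set)


class AssistantMemory:
    """Hypothetical store/search/retrieve layer for an assistant's notes."""

    def __init__(self) -> None:
        self._notes: list[Note] = []

    @staticmethod
    def _words(text: str) -> set[str]:
        # Lowercased alphanumeric tokens for naive keyword matching.
        return set(re.findall(r"[a-z0-9]+", text.lower()))

    def store(self, text: str, tags: set[str] | None = None) -> int:
        """Save a note and return its id (its insertion index)."""
        self._notes.append(Note(text, set(tags or ())))
        return len(self._notes) - 1

    def search(self, query: str, k: int = 3) -> list[tuple[int, Note]]:
        """Rank notes by how many query words appear in their text or tags."""
        query_words = self._words(query)
        scored = []
        for note_id, note in enumerate(self._notes):
            note_words = self._words(note.text) | {t.lower() for t in note.tags}
            score = len(query_words & note_words)
            if score > 0:
                scored.append((score, note_id, note))
        scored.sort(key=lambda item: (-item[0], item[1]))  # best score first, then oldest
        return [(note_id, note) for _, note_id, note in scored[:k]]

    def retrieve(self, note_id: int) -> Note:
        """Fetch a note by id."""
        return self._notes[note_id]
```

Keyword overlap is chosen only to keep the sketch dependency-free; common words like "the" or "is" will match across notes, which is one reason real systems prefer embeddings.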

Killian's Insights Captured

Killian's recent talk sparked a noteworthy community discussion of the AI topics it covered. A recording of the talk was shared for later review.

LLM Finetuning Discussions

Offload Optimizer State to Avoid OOM:

Users discussed offloading optimizer state to CPU memory or CUDA managed memory to prevent out-of-memory (OOM) errors during training. They noted the trade-off that offloading makes performance less predictable, and they stressed the value of fused optimizers for speeding up optimizer.step() calls.
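As a rough illustration of why offloading helps, the sketch below estimates memory for AdamW, which keeps two moment buffers (exp_avg and exp_avg_sq) per parameter. It assumes bf16 weights and gradients (2 bytes each) and fp32 optimizer state (4 bytes per value), and it ignores activations, fp32 master weights, and allocator overhead; the function names are hypothetical.

```python
GB = 1_000_000_000  # decimal gigabytes


def adamw_state_bytes(n_params: int, bytes_per_value: int = 4) -> int:
    """AdamW keeps two moment buffers (exp_avg, exp_avg_sq) per parameter."""
    return 2 * n_params * bytes_per_value


def gpu_bytes(n_params: int, weight_bytes: int = 2, grad_bytes: int = 2,
              offload_optimizer: bool = False) -> int:
    """Rough GPU footprint: weights + grads, plus optimizer state unless offloaded.

    Assumes bf16 weights/grads by default and ignores activations,
    fp32 master weights, and allocator overhead.
    """
    total = n_params * (weight_bytes + grad_bytes)
    if not offload_optimizer:
        total += adamw_state_bytes(n_params)
    return total


if __name__ == "__main__":
    n = 7_000_000_000  # a 7B-parameter model
    print(gpu_bytes(n) / GB)                          # 84.0 (state kept on the GPU)
    print(gpu_bytes(n, offload_optimizer=True) / GB)  # 28.0 (state moved off the GPU)
```

Offloading removes the optimizer state from GPU memory, but gradients and updated weights (or, with managed memory, state pages) must then cross PCIe on every step, which is one source of the performance unpredictability. A fused optimizer reduces kernel-launch overhead in the step itself but does not change these byte counts.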

Shared Insights on Efficient Deep Learning Techniques:

Several users exchanged insights on optimization techniques such as bitsandbytes (bnb) 8-bit casting and LoRA, exploring how these techniques save memory and improve efficiency during training.
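To make the memory argument for LoRA concrete, here is a minimal pure-Python sketch of a LoRA-adapted linear layer following the standard LoRA formulation: the base weight W is frozen, only the low-rank factors A (r x d_in) and B (d_out x r) are trained, the update is scaled by alpha / r, and B is zero-initialized so the adapter starts as a no-op. The enabled flag, the class and helper names, and the initialization scale are illustrative assumptions, not any library's API.

```python
from __future__ import annotations

import random


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters in A (r x d_in) plus B (d_out x r)."""
    return r * (d_in + d_out)


class LoRALinear:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A, B trainable."""

    def __init__(self, W: list[list[float]], r: int, alpha: float, seed: int = 0) -> None:
        d_out, d_in = len(W), len(W[0])
        rng = random.Random(seed)
        self.W = W  # frozen base weight, d_out x d_in
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]  # zero-init: adapter starts as a no-op
        self.scale = alpha / r
        self.enabled = True  # hypothetical switch to bypass the adapter at inference

    def __call__(self, x: list[float]) -> list[float]:
        y = matvec(self.W, x)
        if not self.enabled:
            return y
        delta = matvec(self.B, matvec(self.A, x))
        return [yi + self.scale * di for yi, di in zip(y, delta)]

    def trainable_params(self) -> int:
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

For a 4096 x 4096 projection with r = 8, lora_param_count gives 8 x (4096 + 4096) = 65,536 trainable parameters versus 16,777,216 in the full matrix, about 0.4%. Quantizing the frozen W to 8 bits with bitsandbytes is complementary and is not shown here.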

Extensive Resource Sharing:

Members shared numerous resources on training optimization, including links to the Perfetto UI, the PyTorch profiler, and various GitHub repositories. Specific links included a YouTube video on 8-bit deep learning and a Google Drive folder with related slides and traces.

Enthusiastic Discussion on Model Quantization and FSDP:

Users discussed the benefits and complexities of combining quantization with sharding frameworks such as FSDP2, emphasizing efficient memory management. The conversation covered practical implementation details and challenges of working with NF4 tensors when training large models.
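NF4 tensors, as used in QLoRA, store weights as 4-bit codes plus one scale per block. The sketch below is a deliberately simplified stand-in: it uses symmetric absmax scaling onto the integer levels -7..7 rather than NF4's 16 levels placed at normal-distribution quantiles, keeps codes as unpacked Python ints for clarity, and assumes a block size of 64. The function names are hypothetical.

```python
from __future__ import annotations


def quantize_blockwise_4bit(values: list[float], block_size: int = 64) -> tuple[list[int], list[float]]:
    """Symmetric absmax quantization to integer codes in [-7, 7], one scale per block."""
    codes: list[int] = []
    scales: list[float] = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        absmax = max(abs(v) for v in block)
        # The largest-magnitude value in the block maps to code +/-7;
        # an all-zero block gets scale 1.0 to avoid dividing by zero.
        scale = absmax / 7 if absmax > 0 else 1.0
        scales.append(scale)
        codes.extend(max(-7, min(7, round(v / scale))) for v in block)
    return codes, scales


def dequantize_blockwise_4bit(codes: list[int], scales: list[float], block_size: int = 64) -> list[float]:
    """Recover approximate values: each code times the scale of its block."""
    return [c * scales[i // block_size] for i, c in enumerate(codes)]
```

Each reconstructed value is within half a scale step of the original. With one 32-bit scale per 64 weights, storage works out to 4 + 32/64 = 4.5 bits per weight; a sharding framework such as FSDP2 would then split these codes and scales across ranks so each rank holds only its shard.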

Interactive and Appreciative Community Engagement:

The chat was filled with supportive interactions, humor, and praise, particularly for the informative talks and materials shared by specific members. The community expressed gratitude for detailed presentations, with one member humorously adding, "Memes are the best method of information dissemination to a crowd like us."

LLM Finetuning (Hamel + Dan) ▷ #fireworks

Users in the 'fireworks' channel requested that credits be added to their accounts, posting account IDs such as i-00dda2, dimitry-611a0a, and tanmaygupta9-70b723. The channel's discussion centered on resolving these credit issues.

Unsloth AI Discussions

- Multi-GPU Support Expected in Early July 2024: Members are eagerly anticipating multi-GPU support, with a tentative release date of early July 2024. One member joked, '2025 but no seriously, early July, 2024.'

- LoRA Inference Interface and vLLM: Members were interested in an inference interface that can enable or disable LoRA adapters at inference time. One user found that vLLM supports this and wondered whether it could also work with exl2 or TabbyAPI.

- Overfitting Issues in Training: A member is experiencing overfitting in model training, resulting in lower performance on simpler tasks. Suggestions included trying data augmentation, leveraging weight decay, and ensuring diverse and comprehensive training data.

- Fine-Tuning and EOS Token Discussions: Members discussed the importance of EOS tokens when training instruct models on general text. One member suggested formatting each document as BOS_token + entire text + EOS_token for continued pretraining.

- Hugging Face AutoTrain Adds Unsloth Support: Hugging Face AutoTrain now supports Unsloth, letting users fine-tune LLMs more efficiently. The new feature was met with excitement and appreciation.
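The suggestion to wrap each whole document as BOS_token + entire text + EOS_token for continued pretraining can be sketched in a few lines. The token strings below are placeholders modeled on Llama 2's "<s>" and "</s>"; the correct values come from your tokenizer, and many tokenizers already prepend BOS automatically, so it is worth checking for doubled BOS tokens. The function names are hypothetical.

```python
from __future__ import annotations

# Placeholder special tokens modeled on Llama 2; use your tokenizer's actual values.
BOS_TOKEN = "<s>"
EOS_TOKEN = "</s>"


def wrap_document(text: str, bos: str = BOS_TOKEN, eos: str = EOS_TOKEN) -> str:
    """BOS_token + entire text + EOS_token, so the model sees where each document ends."""
    return f"{bos}{text}{eos}"


def build_corpus(docs: list[str]) -> str:
    """Concatenate wrapped documents into one stream, e.g. before packing into fixed-length chunks."""
    return "".join(wrap_document(d) for d in docs)
```

Wrapping each document individually, rather than only the whole corpus, is what lets the model learn to emit EOS at natural stopping points.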

Challenges with Deep Model Integration and Influence Functions

Members discussed why standard Transformers struggle to learn certain tasks without supervised scratchpads, and why unsupervised scratchpads are inefficient to learn with gradient descent when a task requires complex interactions among tokens. The utility and limitations of influence functions were also explored, with reference to Koh and Liang's paper, focusing on their practical applicability and the approximations they require. Links were also shared to papers on VALL-E 2 (neural codec language models), an autoregressive model for scalable image generation, and replacing dense layers with structured matrices for better compute efficiency.
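For reference, Koh and Liang define the influence of upweighting a training point z on the loss at a test point z_test as follows, where θ̂ denotes the trained parameters and H is the empirical Hessian of the training loss over n training points:

$$
\mathcal{I}_{\text{up,loss}}(z, z_{\text{test}}) = -\nabla_{\theta} L(z_{\text{test}}, \hat{\theta})^{\top} \, H_{\hat{\theta}}^{-1} \, \nabla_{\theta} L(z, \hat{\theta}),
\qquad
H_{\hat{\theta}} = \frac{1}{n} \sum_{i=1}^{n} \nabla_{\theta}^{2} L(z_i, \hat{\theta})
$$

Forming and inverting H is infeasible for large models, so the paper approximates the inverse-Hessian-vector product (for example with conjugate gradients or stochastic estimation); these approximations are one source of the practical limitations discussed above.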

Perplexity AI - LM Studio Discussions

The discussions in this section cover various topics related to LM Studio. Members sought tools for parsing PDFs locally, discussed issues with WebUI and LM Studio, voiced concerns that SB 1047 could harm open-source AI, and debated adapters and RAM and GPU upgrades for running larger models efficiently. GPU recommendations ranged from older server cards for budget builds to more powerful GPUs with at least 24 GB of VRAM for better performance.
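The 24 GB VRAM threshold can be sanity-checked with back-of-the-envelope arithmetic: weight memory is roughly parameter count times bits per weight divided by 8. This sketch assumes a flat 2 GB allowance for KV cache and runtime overhead (an assumption; real usage grows with context length), and real GGUF quantizations store per-block scales, so their effective bits per weight are somewhat higher than the nominal value. The function names are hypothetical.

```python
def weights_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight memory in decimal GB: billions of params x bits / 8."""
    return n_params_billion * bits_per_weight / 8


def fits_in_vram(n_params_billion: float, bits_per_weight: float,
                 vram_gb: float, overhead_gb: float = 2.0) -> bool:
    """True if weights plus a flat overhead allowance fit in the given VRAM.

    overhead_gb is an assumed allowance for KV cache and runtime buffers.
    """
    return weights_gb(n_params_billion, bits_per_weight) + overhead_gb <= vram_gb


if __name__ == "__main__":
    for size_b, bits in [(8, 16), (34, 4), (70, 4)]:
        print(f"{size_b}B @ {bits}-bit: {weights_gb(size_b, bits):.1f} GB, "
              f"fits 24 GB: {fits_in_vram(size_b, bits, 24)}")
```

By this estimate a 70B model at 4 bits needs about 35 GB for weights alone, more than a single 24 GB card holds, while a 34B model at 4 bits (about 17 GB) fits.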

HuggingFace LM Studio Models and Discussion

Boptruth-NeuralMonarch-7B debuts:

A member shared Boptruth-NeuralMonarch-7B, a merge created with LazyMergekit that works best with the Alpaca chat template. The model is available on Hugging Face.

Taming the Qwen2 72B Model:

Dolphin 2.9.2 Qwen2 72B at Q8 runs well on an M3 Max with 128 GB of memory.

Llama3 Fine Tune Impresses:

Testing Llama3-FiditeNemini-70B-Source.i1-Q6_K.gguf produced clever writing, which the tester rated above the base Llama3 model.

Prompt Issues Questioned:

A member asked whether the Llama3 fine-tune suffers from random shouting; the tester found it satisfactory for role-play.

P40 Temperature and Cooling Discussions:

Members discussed cooling solutions for the P40 card, which thermal-throttles at 90°C, and shared AliExpress links for fans.

8700G Performance Intrigue:

The Ryzen 7 8700G reached 11 tokens per second in LM Studio, making it an affordable choice for large models.

LM Studio Multi-GPU Performance Concerns:

Members criticized LM Studio's multi-GPU handling and identified PCIe throughput as a bottleneck.

Tesla V100 Compatibility Inquiry:

A user asked about running LM Studio on a Tesla V100, raising compatibility concerns.

OS Preferences for LM Studio:

Members debated LM Studio performance on Windows vs. Linux; Windows was favored for ease of use, while the Linux version is in beta and requires specific setup.

HuggingFace Announcements and Discussions

HuggingFace Discussion Highlights:

- Shared updates on various HuggingFace spaces, including anime fine-tuning, chat models, and lap time prediction projects

- Introduced the CAMB AI MARS5 TTS model and a DALL-E 3 image captions dataset

CVPR 2024 and NLP Discussions:

- Discussed CVPR 2024 paper summaries app and Label Studio ML backend

- Shared insights on TensorFlow model compatibility and MaPO text-to-image alignment technique

Nous Research AI Announcements:

- Released the Character Codex dataset

General Tech Discussions:

- Presented various discussions on mutual information, RLHF creativity impact, and model quantization

- Announced the open-source Rig library and discussed making LLMs smaller

Cohere & Latent Space AI Tidbits:

- Introduced Cohere’s Apple Intelligence integration and Developer Office Hours

- Highlights from the Apple and OpenAI partnership integrating ChatGPT into Apple's ecosystem

Interconnects (Nathan Lambert) - News

Members discuss mixed reactions to the Apple Intelligence announcement, expressing skepticism about OpenAI integration and privacy concerns. Apple's differentiation between Apple Intelligence and Siri is noted, possibly for strategic reasons to shape user perception.

OpenAccess AI Collective - General Help

Members of the OpenAccess AI Collective discussed the impressive Rakuten models specializing in Japanese language processing. There was amusement and surprise at the model's capabilities, with humorous reactions to its responses. The community acknowledged the value of quality large language models tailored for specific languages, with a nod to Mistral-7B models. The discussion highlighted the superior performance of these models and the potential for commercial use.

llamafile Conversation

llamafile (1 message):

- jartine: is that a grammar thing?

FAQ

Q: What AI-related features did Apple integrate into iOS, iPadOS, and macOS with the help of OpenAI's ChatGPT?

A: Apple integrated AI-powered features like document summarization and photo analysis into their devices.

Q: What were the mixed reactions to Apple's AI integration, and how did Apple's models compare to others such as GPT-4?

A: Some questioned Apple's capability to ship AI independently. Apple's on-device models were noted to perform well, but server-side models lagged behind GPT-4.

Q: What are some of the topics discussed in the AI Discord Recap?

A: Discussions covered anticipated enhancements in AI models, model compression and optimization strategies, benchmarks for AWS instances, stable diffusion models, AI challenges, model deployment, real-world ML applications, innovative AI tools, AI expectations, and capabilities.

Q: What potential impacts on user adoption and perceptions were discussed regarding Apple's separation of Apple Intelligence from Siri?

A: Members discussed how keeping Apple Intelligence separate from Siri might affect user adoption and perceptions of the new system's capabilities.

Q: Which bill related to AI control and centralization was criticized?

A: Dan Jeffries criticized the California AI Control and Centralization Bill (SB 1047) for its centralized control over AI and the threat it poses to open-source AI innovation.

Q: What advancements were shared regarding enhancing Open Interpreter with a new prompting system?

A: Work was shared on enhancing Open Interpreter with a skilled prompting system that can store, search, and retrieve information like a personal assistant.

Q: What were the insights shared on efficient deep learning techniques?

A: Insights were shared on advanced optimization techniques like bnb 8bit casting, LoRA, offloading optimizer state to prevent OOM errors, and the benefits and complexities of quantization.

Q: What issues were discussed regarding models like Llama3 and Qwen2 and hardware like the P40?

A: Discussions covered issues and performance results ranging from impressive Llama3 and Qwen2 results to P40 cooling solutions and multi-GPU handling concerns.
