[AINews] Gemma 2: The Open Model for Everyone • Buttondown

Chapters

AI Twitter Recap

AI Discord Recap

Various Discord Summaries

Discord Community Highlights

Discussion on Gemma 2 Innovations and Traditional vs. Modern Distillation Methods

AI Tools and Flight Tracking Apps

Models and AI Performance Discussions

World Simulation Discussion

Latent Space - General Chat

Latent Space Discussion and Updates

Interconnects (Nathan Lambert) Memes

Interconnects Clarification on Bases Terminology

Discussion on Various AI Topics

AI Twitter Recap

This section recaps AI-related discussions on Twitter, covering new models and architectures as well as tools, frameworks, and platforms. Highlights include the new Open LLM Leaderboard, the performance of Alibaba's Qwen models, and Anthropic's release of Claude 3.5 Sonnet. It also covers research on eliminating matrix multiplication in LLMs, NV-Embed techniques, a new LangSmith feature from LangChain, and Mozilla's new AI offerings, along with contests such as Anthropic's Build with Claude and Meta's Llama Impact Innovation Awards.

AI Discord Recap

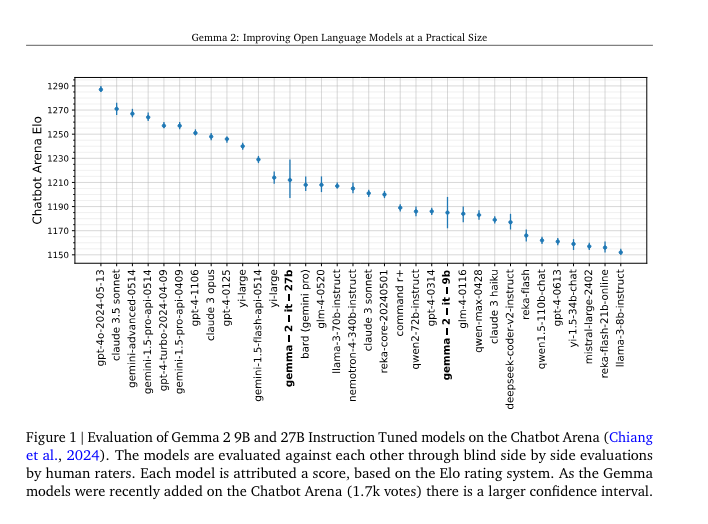

The AI Discord community discussed a variety of topics, including Google's release of the Gemma 2 models on Kaggle, Meta's new LLM Compiler models for code optimization, surprisingly high rankings for lesser-known models such as Yi on the Open LLM Leaderboard, and new tools such as llama-agents for deploying multi-agent AI systems. Other threads covered benchmark analyses, ethical considerations in AI training, developments in AI licensing models, and innovations such as the SPARSEK Attention mechanism for efficient long-sequence processing.

Various Discord Summaries

Channels across Unsloth AI, HuggingFace, OpenAI, CUDA Mode, Eleuther, LAION, Nous Research AI, Stability.ai, Modular Mojo, Perplexity AI, Latent Space, LM Studio, and LangChain AI covered a wide range of AI advancements, challenges, and community interactions. Users discussed model benchmarks, deployment challenges, hardware requirements, model optimizations, API usage, specialized chips, and ethical considerations, and exchanged insights on new tools, techniques, and upcoming developments.

Discord Community Highlights

- LlamaIndex's New AI Warriors: LlamaIndex introduced llama-agents, a multi-agent AI framework, and initiated sign-ups for its LlamaCloud service.

- JsonGate at LlamaIndex: Engineers debated the exclusion of JSONReader, leading to a pull request for its inclusion.

- When AIs Imagine Too Much: LlamaParse faced scrutiny for hallucinating data, and the team asked users to submit affected documents for debugging.

- BM25's Re-indexing Dilemma: Members discussed how inefficient re-indexing is with BM25, and some suggested sparse embeddings as an alternative.

- Ingestion Pipeline Slowdown: Performance degradation in LlamaIndex's ingestion pipelines prompted proposals to delete nodes in batches.

- API Revenue Surpasses Azure Sales: OpenAI's own API revenue surpassed Microsoft's revenue from reselling OpenAI models through Azure, a significant shift in the market.

- Meta's New Compiler Tool: Meta unveiled the Meta Large Language Model Compiler, focusing on compiler optimization capabilities.

- Character Calls - The AI Phone Feature: Character.AI introduced Character Calls for voice interactions, eliciting mixed feedback.

- The Coding Interview Dilemma: Engineers discussed challenging interview questions and unclear expectations, mentioning advanced voice features in ChatGPT.

- Patent Discourse - Innovation or Inhibition?: The community debated patented technologies, including Google's patent on the transformer architecture.

- Stheno 8B Grabs the Spotlight: OpenRouter launched Stheno 8B by Sao10k, offering new creative writing capabilities.

- Technical Troubles with NVIDIA Nemotron Selection: Users reported varying experiences with NVIDIA Nemotron selection across devices.

- API Key Compatibility Query and Uncensored AI Models Discussed: Members asked about the compatibility of OpenRouter API keys and discussed alternative uncensored AI models.

- Google Gemini API Expands to a 2M-Token Window: Developers welcomed Google's Gemini 1.5 Pro update, which offers a 2M-token context window and code execution capabilities.

- Seeking Anthropic's Artifacts Parallel in OpenRouter: Members discussed whether Sonnet-3.5 on OpenRouter could generate code the way Anthropic's Artifacts feature does.

- Innovative API Strategies: The Cohere API allowed non-commercial usage by OpenRouter without license agreement breaches.

- Command-R Model Sparks Exclusivity Buzz: The Command-R model's exclusivity through OpenRouter sparked discussion about licensing and accessibility.

- Licensing Pitfalls Narrowly Avoided: Debate on potential misuse of Command-R's licensing by SpicyChat was resolved through payments to Cohere.

- Technical Troubleshooting Triumph: A member shared how they resolved errors in a Cohere API script by following the official multi-step tool documentation.

- Rust Library Unveiled with Rewards Program: Introduction of Rig, a Rust library for LLM-powered applications, with a feedback program rewarding developers.

- Decoding the Neural Networks: A study group in SF is working through neural networks using Andrej Karpathy's series.

- Open-Source Models Attract Interpreter Enthusiasts: Members discussed the best open-source models for local deployment, though GPT-4o, a proprietary model, also featured prominently in the conversation.

- GitHub Policy Compliance Dialogue: Concerns about projects conflicting with GitHub's policies were addressed.

- Meta Charges Ahead with LLM Compiler: Meta's LLM Compiler, built for code optimization and disassembly, was highlighted.

- Changing Tides for O1: Updates on O1 release changes and discussions on available models for different languages.

- Debugging Beware: NCCL Watchdog Meets CUDA Error: A member reported a CUDA error that caused the NCCL watchdog thread to terminate.

- Gemma2 Garners Goggles, Google Greatness: Evaluation of Google's Gemma 2 for AI tasks and comparison with other models.

- Meta Declares LLM Compiler: Meta's announcement of LLM Compiler models for code optimization sparked interest.

- Gemma2 vs Transformers: Round 1 Fight: Members raised technical issues with the Transformers code for Gemma 2 and were awaiting fixes.

- Repeat After Me, Mistral7B: A member reported Mistral7B getting stuck in repetition loops after instruction tuning, a puzzling result given differences between the training datasets.

- PyTorch's Rise Captured on Film: Members shared the official PyTorch Documentary, which chronicles the framework's development and success.

- Generic FPGA Design for Transformers: A guild member explained FPGA design adaptability for loading any Transformer model.

- Iterative Improvement on Tinygrad: Progress on integrating SDXL with tinygrad and plans for performance improvements.

- Hotz Hits the Presentation Circuit: George Hotz scheduled for an eight-minute presentation.

- Tinygrad Call for Code Optimizers: A cash incentive was announced for speeding up the matching engine.

- Deep Dive into Tinygrad's Internals: Technical discussions on porting PyTorch's MultiheadAttention to tinygrad and estimating VRAM requirements.

- Anthropic Announces Build-With-Claude Contest: A contest for building applications with Claude was highlighted.

- LLM Cover Letter Creation Queries: Discussions on fine-tuning language models for generating cover letters.

- Social Media Style Mimicry via LLM: A member building a social media bot with Flask and Tweepy sought guidance on training it to mimic a posting style.

- Cursor Gains Ground Among Students: Members debated using Cursor versus GitHub Copilot and discussed integration possibilities.

- Credit Allocation and Collaborative Assistance: Requests for assistance and updates on credit allocation dynamics within the community.
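The BM25 re-indexing dilemma comes from how BM25 scores are computed: every score depends on corpus-wide statistics (document count, per-term document frequency, average document length), so adding documents changes the scores of documents that were already indexed. The minimal sketch below illustrates this with a plain-Python Okapi BM25, assuming the commonly used defaults `k1=1.5`, `b=0.75` and a Lucene-style IDF. The `BM25Index` class is hypothetical and is not LlamaIndex's retriever API.

```python
from __future__ import annotations

import math
from collections import Counter


class BM25Index:
    """Hypothetical minimal in-memory Okapi BM25 index, for illustration only."""

    def __init__(self, k1: float = 1.5, b: float = 0.75) -> None:
        self.k1 = k1
        self.b = b
        self.docs: list[Counter] = []  # term frequencies per document
        self.doc_lens: list[int] = []  # token count per document
        self.df: Counter = Counter()   # number of documents containing each term

    def add(self, text: str) -> None:
        tokens = text.lower().split()
        tf = Counter(tokens)
        self.docs.append(tf)
        self.doc_lens.append(len(tokens))
        self.df.update(tf.keys())  # each term counted once per document

    def idf(self, term: str) -> float:
        n, df = len(self.docs), self.df[term]
        # The "1 +" inside the log keeps IDF positive (Lucene-style variant).
        return math.log(1 + (n - df + 0.5) / (df + 0.5))

    def score(self, query: str, doc_id: int) -> float:
        avgdl = sum(self.doc_lens) / len(self.doc_lens)  # corpus-wide statistic
        tf, dl = self.docs[doc_id], self.doc_lens[doc_id]
        total = 0.0
        for term in query.lower().split():
            f = tf[term]
            if f == 0:
                continue
            norm = f + self.k1 * (1 - self.b + self.b * dl / avgdl)
            total += self.idf(term) * f * (self.k1 + 1) / norm
        return total


index = BM25Index()
index.add("gemma two release notes")
index.add("llama agents framework")
before = index.score("gemma", 0)
index.add("gemma benchmark results")  # a new document that shares the term "gemma"
after = index.score("gemma", 0)       # document 0 is unchanged, yet its score drops
```

Because IDF and average length shift with every insertion, a persisted BM25 index either tolerates stale statistics or recomputes them, which is why sparse-embedding approaches that score documents independently came up as an alternative.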

Discussion on Gemma 2 Innovations and Traditional vs. Modern Distillation Methods

The Discord chat covered a range of AI topics, including criticism and praise for ChatGPT 3.5, hardware issues with AI systems, and the new features in Google's Gemma 2 release. Users debated traditional Knowledge Distillation (KD) versus the modern distillation methods used in Gemma 2; some highlighted the benefits of the modern approaches and were excited about Gemma 2's architectural changes, such as combined pre- and post-layer normalization (pre/post LN) and logit soft-capping. Members also shared links about Gemma 2 and related resources.
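Two of the ideas from this discussion can be sketched in a few lines of plain Python. Logit soft-capping squashes logits with `cap * tanh(x / cap)`, so they stay within `(-cap, cap)` while small values pass through almost unchanged. Classic (Hinton-style) knowledge distillation trains a student to match a teacher's temperature-softened output distribution via KL divergence. The cap of `30.0` and temperature of `2.0` below are illustrative assumptions, and this loss is the traditional KD formulation, not necessarily the exact objective Google used for Gemma 2.

```python
from __future__ import annotations

import math


def soft_cap(logits: list[float], cap: float) -> list[float]:
    """Soft-capping: maps each logit into (-cap, cap); near-linear for |x| << cap."""
    return [cap * math.tanh(x / cap) for x in logits]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: list[float], teacher_logits: list[float], temperature: float = 2.0
) -> float:
    """Traditional KD loss: KL(teacher || student) on softened distributions,
    scaled by T^2 so gradient magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature**2 * kl


capped = soft_cap([100.0, 5.0, -100.0], cap=30.0)  # large logits pulled inside (-30, 30)
loss_same = distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])  # student matches teacher
loss_diff = distillation_loss([3.0, 2.0, 1.0], [1.0, 2.0, 3.0])  # student disagrees
```

The distillation loss is zero only when the student reproduces the teacher's distribution, so the student learns from the teacher's full probability spread rather than from a single "correct" token.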

AI Tools and Flight Tracking Apps

Astrabert introduced BLAST-SummarAIzer to simplify interpreting BLAST search results for researchers. Deuz_ai_80619 shared Flight-Radar, a real-time flight tracking app with geolocation and flight data download features. The HuggingFace reading group discussed concerns about saturation, OpenAI introduced CriticGPT for bug detection, and the OpenAI discussions covered topics like coding performance of models and AI limitations. CUDA Mode discussions included debates about tensor cores, PyTorch features, and Triton pow functions.

Models and AI Performance Discussions

- Members discussed issues affecting model performance and the introduction of Google's Gemma 2 model, highlighting its notable features and performance advantages.

- Reviewing an async checkpointing PR, members raised concerns about the added complexity and its impact on memory allocation.

- Excitement surrounded Gemma 2's release with praise for beating larger models and innovative training techniques.

- Members also discussed how sparsity can accelerate neural network training, and how to checkpoint optimizer state alongside model parameters.

- Insights shared on advancements in optimizers like Adam-mini and debates on optimal layer ordering in transformers.

- Additional talks on neurons and weight permutations, SPARSEK attention mechanism, manifold hypothesis testing, ethical considerations in model training, and the role of NSFW data in AI models.
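To make the async checkpointing concerns concrete, here is a minimal sketch of one common pattern, assuming the training state is a plain Python dict: copy the state on the training thread, then serialize the copy on a background thread so training is not blocked by disk I/O. The `AsyncCheckpointer` class is hypothetical and is not the code from the PR under review; the deep copy is where the extra memory allocation comes from, and the background thread is part of the added complexity.

```python
import copy
import os
import pickle
import tempfile
import threading
from typing import Optional


class AsyncCheckpointer:
    """Hypothetical helper: snapshot state synchronously, write it asynchronously."""

    def __init__(self) -> None:
        self._thread: Optional[threading.Thread] = None

    def save(self, state: dict, path: str) -> None:
        self.wait()                      # allow at most one in-flight write
        snapshot = copy.deepcopy(state)  # extra memory: a full copy of the state
        self._thread = threading.Thread(target=self._write, args=(snapshot, path))
        self._thread.start()

    @staticmethod
    def _write(snapshot: dict, path: str) -> None:
        tmp_path = path + ".tmp"
        with open(tmp_path, "wb") as f:
            pickle.dump(snapshot, f)
        os.replace(tmp_path, path)  # atomic rename: readers never see a partial file

    def wait(self) -> None:
        if self._thread is not None:
            self._thread.join()
            self._thread = None


# Hypothetical toy state: model parameters plus Adam-style optimizer moments.
state = {"step": 0, "params": [0.0] * 4, "optimizer": {"m": [0.0] * 4, "v": [0.0] * 4}}
ckpt = AsyncCheckpointer()
path = os.path.join(tempfile.mkdtemp(), "ckpt.pkl")
ckpt.save(state, path)
state["step"] = 1  # training keeps mutating state; the snapshot is unaffected
ckpt.wait()
with open(path, "rb") as f:
    restored = pickle.load(f)
```

Saving the optimizer moments together with the parameters lets training resume exactly, at the cost of a snapshot that here is about three times the size of the parameters alone.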

World Simulation Discussion

In the World Simulation channel, one user shared a series of GIF links with themes such as the Scary Movie Matrix Chair, a Halloween ghost, Doctor Strange, and The Black Hole. Otherwise, the channel saw casual exchanges, such as a member greeting the group and receiving a reply.

Latent Space - General Chat

Figma AI is free for a year

According to @AustinTByrd, "Figma AI is free for a year before they start billing everyone." Full details are in @AustinTByrd's Config2024 thread.

Conference talks now available via livestream

Recordings of tracks that were not livestreamed, such as the RAG talks, have not yet been posted. Meanwhile, members can watch select livestreams on the AI Engineer YouTube channel.

Compass transcript site shared

A Compass transcript site was shared for browsing conference transcripts; members described it as a useful, solid resource.

LangGraph Cloud launches

@LangChainAI launched LangGraph Cloud, offering scalable infrastructure for fault-tolerant agents with integrated tracing and monitoring. However, some members questioned the need for specialized infrastructure to run state machines.

Lots of wearable tech emerging

Members discussed new wearables such as Bee.computer, which offers recording, transcription, and task execution. Bee also provides an Apple Watch app, making its dedicated device optional.

Latent Space Discussion and Updates

LLM Paper Club West (70 messages🔥🔥):

- Members discussed information processing in the context of AGI, highlighting the importance of multitasking for future AI systems.

- Members reported technical difficulties during the event presentation and suggested improvements.

- Members discussed planning and logistics for the AI Engineer World's Fair.

- Members requested recaps of the AI Engineer Conference, noting how hard it was to follow multiple simultaneous events.

- Managing event resources and logistics was a key topic, focusing on ensuring a seamless experience for presenters and guests.

Links mentioned:

- AI Engineer: Talks, workshops, events, and training for AI Engineers.

LM Studio Models Discussion Chat (23 messages🔥):

- Discussions covered model performance on different setups, such as running DeepSeek-Coder-V2 on a Mac Studio, and memory constraints.

- Gemma 2's release received mixed reviews due to its limited context window.

- Meta introduced new LLM Compiler models focusing on code optimization and compiler capabilities.

Links mentioned:

- Google | Gemma 2 | Kaggle: Lightweight open models from Google.

- Open LLM Leaderboard 2 - a Hugging Face Space by open-llm-leaderboard: Leaderboard for LLM models.

- Tweet from AI at Meta (@AIatMeta): Announcement of Meta LLM Compiler models.

- Reddit - Dive into anything: Discussion thread on Gemma 2 release.

Interconnects (Nathan Lambert) Memes

In this section, members engage in various fun conversations and activities, such as guessing ML figures by their shoes, discussing the founders of Cohere, analyzing interview dynamics, and sharing playful links and memes. The discussions range from dissecting YouTube interviews to theorizing about the meaning behind emoji use in threads. Links to videos, tweets, and humorous content are exchanged, adding a lighthearted tone to the interactions.

Interconnects Clarification on Bases Terminology

A member inquired about the term 'bases' in a recent synthetic data article, and another member clarified that it referred to base models.

Discussion on Various AI Topics

This section highlights AI discussions from several channels. Users covered topics ranging from porting PyTorch's MultiheadAttention to tinygrad to estimating the VRAM needed for model training. Others asked about fine-tuning LLMs for specific tasks such as cover letter generation and building tweet-based bots. The section also covers moderator actions to prevent spam, questions about Gemma 2 support, and shared training code.
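For the VRAM question, a common back-of-the-envelope approach multiplies the parameter count by the bytes stored per parameter. The sketch below assumes two modes: 16-bit inference (2 bytes per parameter for the weights) and mixed-precision training with Adam (roughly 16 bytes per parameter: 16-bit weights and gradients plus fp32 master weights and two fp32 Adam moments, the breakdown popularized by the ZeRO paper). It ignores activations, KV cache, and framework overhead, so treat the results as lower bounds, not as the estimate given in the discussion. The function and mode names are hypothetical.

```python
# Bytes stored per parameter, excluding activations and framework overhead.
BYTES_PER_PARAM = {
    # Weights only, in fp16/bf16.
    "inference_16bit": 2,
    # Mixed-precision Adam: 16-bit weights (2) + 16-bit gradients (2)
    # + fp32 master weights (4) + fp32 Adam first moment (4) + second moment (4).
    "train_adam_mixed": 16,
}


def estimate_vram_gb(num_params: float, mode: str) -> float:
    """Rough lower bound on GPU memory in GB (1 GB = 1e9 bytes) for a given mode."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


# A model with a nominal 9B parameters:
print(estimate_vram_gb(9e9, "inference_16bit"))   # 18.0
print(estimate_vram_gb(9e9, "train_adam_mixed"))  # 144.0
```

The gap between the two numbers is why full fine-tuning needs far more memory than inference, and why techniques that shrink optimizer state or train only a small subset of parameters are popular on consumer GPUs.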

FAQ

Q: What are some of the recent updates and releases in the AI models and architectures space discussed in the AI-related discussions on Twitter?

A: Recent updates and releases include the new Open LLM Leaderboard, the performance of Alibaba's Qwen models, and Anthropic's release of Claude 3.5 Sonnet. Research on eliminating matrix multiplication in LLMs, NV-Embed techniques, LangChain's LangSmith feature, and Mozilla's new AI offerings were also mentioned.

Q: What were some of the topics discussed in the AI Discord community related to AI advancements, challenges, and community interactions?

A: Discussions in the AI Discord community covered topics such as model benchmarks, challenges in AI deployment, hardware requirements, model optimizations, API usage, specialized chips, ethical considerations, new techniques, and upcoming developments in the AI space.

Q: What are some of the key highlights from the discussions on Gemma 2 AI model by Google on Kaggle?

A: Discussions of Gemma 2 centered on traditional Knowledge Distillation (KD) versus modern distillation methods, the benefits of the modern approaches, and Gemma 2's architectural innovations such as pre/post layer normalization and logit soft-capping. The same chat also touched on hardware issues with AI systems.

Q: What were some of the major announcements and discussions related to Meta's LLM Compiler models for code optimization and compiler capabilities?

A: Discussions highlighted Meta's new LLM Compiler models, which focus on code optimization and compiler capabilities. These threads ran alongside discussion of Gemma 2's release and the technical issues affecting it in Transformers.

Q: What were some of the innovative AI tools and frameworks introduced in the discussions, such as llama-agents for deploying multi-agent AI systems?

A: Tools, models, and techniques discussed include llama-agents for deploying multi-agent AI systems, Google's Gemma 2 models on Kaggle, an async checkpointing pull request under review, the Adam-mini optimizer, and insights on using sparsity to accelerate neural network training.
