[AINews] Inflection-2.5 at 94% of GPT4, and Pi at 6m MAU

Chapters

AI Twitter Recap

Summary of High Level Discord Summaries

HuggingFace Discord Summary

Nous Research AI Genstruct 7B Model Announcement

Training Techniques and Model Development

OpenAI Discord Channel Discussions

Latent Space Discussions

Eleuther Community Updates

HuggingFace Community Interactions

HuggingFace Diffusion Discussions

Q&A and Discussions on Different Topics

CUDA Mode Updates

Alignment Lab AI

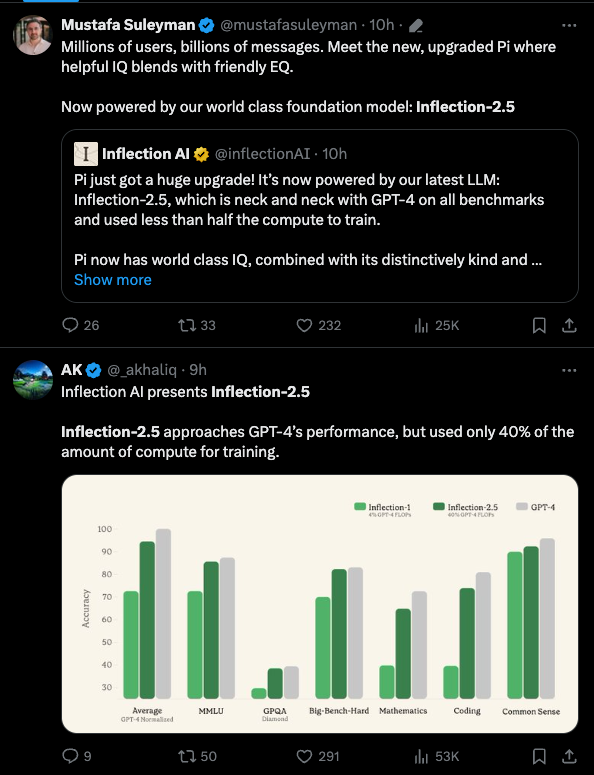

AI Twitter Recap

This section provides a recap of various tweets related to AI developments and events in the industry. It covers a range of topics including the release of Claude 3, retrieval augmented generation (RAG) capabilities, benchmarking and evaluation insights, AI research and techniques, as well as memes and humor circulating in the AI community.

Summary of High Level Discord Summaries

The Discord summaries discussed various topics including new AI models and tools, hardware optimization, AI game development, and debates around model optimization and pruning. Highlights include the release of innovative AI applications, community dynamics around AI projects, and the emergence of new models like Genstruct 7B and Yi-9B. Additionally, hardware configurations, API integration, and discussions on the efficiency of AI models like Claude-3 and Mistral were major talking points.

HuggingFace Discord Summary

New Features and Updates

- Entrepreneurs Seek Open Source Model Groups: Entrepreneurs on HuggingFace are looking for a community to discuss the application of open-source models in small businesses. No dedicated channel or space was recommended within the provided messages.

- New Model on the Block, Yi-9B: Tonic_1 launched Yi-9B, a new model in the Hugging Face collection, available for use with a demo. Hugging Face may soon be hosting leaderboards and gaming competitions.

- Inquiry into MMLU Dataset Structure: Privetin displayed interest in understanding the MMLU datasets, a conversation that went without elaboration or engagement from others.

- Rust Programming Welcomes Enthusiasts: Manel_aloui kicked off their journey with the Rust language and encouraged others to participate, fostering a small community of learners within the channel.

- AI's Mainstream Moment in 2022: Highlighted by an Investopedia article shared by @vardhan0280, AI's mainstream surge in 2022 was attributed to the popularity of DALL-E and ChatGPT.

- New Features in Gradio 4.20.0 Update: Gradio announced version 4.20.0, now supporting external authentication providers like HF OAuth and Google OAuth, as well as introducing a delete_cache parameter and /logout feature to enhance the user experience. The new gr.DownloadButton component was also introduced for stylish downloads, detailed in the documentation.
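The Gradio items above can be sketched in a few lines. This is a minimal illustration rather than the official example: it assumes Gradio 4.20.0 or later is installed, the helper names `write_sample_file` and `build_demo` are hypothetical, and the one-day cache values are arbitrary. The OAuth providers and the /logout route are not shown.

```python
import tempfile
from pathlib import Path


def write_sample_file() -> str:
    """Create a small text file for the download button to serve."""
    path = Path(tempfile.mkdtemp()) / "report.txt"
    path.write_text("hello from the demo\n", encoding="utf-8")
    return str(path)


def build_demo():
    """Build a Blocks app that uses delete_cache and gr.DownloadButton."""
    # Imported lazily so this module still loads where Gradio is not installed.
    import gradio as gr

    # delete_cache=(frequency, age): every `frequency` seconds, delete cached
    # files older than `age` seconds. The one-day values are illustrative only.
    with gr.Blocks(delete_cache=(86400, 86400)) as demo:
        gr.Markdown("Download the sample report:")
        gr.DownloadButton("Download report", value=write_sample_file())
    return demo
```

Calling `build_demo().launch()` would serve the app locally. Keeping the Gradio import inside the function is only a convenience for this sketch; a real app would normally import it at the top of the file.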

Nous Research AI Genstruct 7B Model Announcement

Nous Research announces the release of the Genstruct 7B model, an instruction-generation model inspired by the Ada-Instruct paper. This model can create valid instructions from raw text, enabling the development of new finetuning datasets. It is designed to generate questions for complex scenarios, fostering detailed reasoning.

Training Techniques and Model Development

User-Informed Generative Training:

- The Genstruct 7B model is focused on user-provided context, building on ideas from Ada-Instruct to enhance reasoning capabilities. It is available for download on Hugging Face.

Led by a Visionary:

- Genstruct 7B development was led by <@811403041612759080> at Nous Research, emphasizing innovation in instruction-based model training.

OpenAI Discord Channel Discussions

This section provides insights into various discussions held on OpenAI's Discord channels. Users shared information on navigating channel posting requirements, improving problem-solving with GPT-5, enhancing storytelling randomization, seeking help for GPT classifier development, and more. The content touches on topics like positivity in role-play prompts, vision models for complex tasks, and the potential of GPT-5. Additionally, hardware discussions, LM Studio capabilities, and alternative AI services are explored. The interactions highlight a collaborative environment with users sharing knowledge and support to optimize their AI-related endeavors.

Latent Space Discussions

LlamaIndex

- Users discussed LlamaIndex usage, including customization, document ingestion, vector store recommendations, and discrepancies in query responses between LLM and VectorStoreIndex. Recommendations were given for tools like Qdrant, ChromaDB, and Postgres/pgvector.

Enhancing In-context Learning

- A user shared work on Few-Shot Linear Probe Calibration for in-context learning and sought support by starring the GitHub repository.

General Chat

- Conversations ranged from tea time tales from Twitter to Discord drama involving Stability AI and Midjourney.

- Users also joked about a newsletter's expansion and shared GIFs in response to the drama.

Announcements

- New podcast episodes featuring various guests were announced, along with discussions hitting Hacker News.

- Members were invited to join presentations on model serving survey papers in the Discord channel.

LLM Paper Club

- Discussions delved into performance differences, inference optimization, distributed training, and model serving survey presentations. Links to related resources and papers were shared for further exploration.

Eleuther Community Updates

Eleuther announces new benchmarks for Korean language model evaluation and calls for contributions to multilingual benchmarks. Discussions in the research channel cover topics like the Pythia model suite, adaptive recurrent vision, batch normalization vs. token normalization debate, innovative memory reduction strategies, and optimizer hook insights with PyTorch. In the lm-thunderdome channel, conversations revolve around customizing model outputs, MCQA evaluation, consistency in fine-tuning, evaluating with generation vs. loglikelihood, and discussions on multilingual evaluation criteria. The gpt-neox-dev channel discusses issues with optimizer memory peaks, dependency conflicts, using Docker images for dependency management, deploying Poetry for package management, and challenges with fused backward/optimizer implementations.
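The "generation vs. loglikelihood" distinction for multiple-choice (MCQA) evaluation mentioned above can be illustrated with a small sketch. It is not taken from any Eleuther codebase: the function names are hypothetical, and the per-token log-probabilities are made-up numbers standing in for what a model would return for each answer continuation.

```python
import re
from typing import Dict, List, Optional


def pick_by_loglikelihood(choice_logprobs: Dict[str, List[float]],
                          normalize: bool = False) -> str:
    """Return the choice whose continuation has the highest log-likelihood.

    With normalize=True the sum is divided by the token count, which removes
    the penalty that longer answers otherwise pay.
    """
    def score(logprobs: List[float]) -> float:
        total = sum(logprobs)
        return total / len(logprobs) if normalize else total

    return max(choice_logprobs, key=lambda label: score(choice_logprobs[label]))


def pick_by_generation(generated: str, labels: List[str]) -> Optional[str]:
    """Return the first standalone choice label found in free-form output."""
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, labels)) + r")\b")
    match = pattern.search(generated)
    return match.group(1) if match else None


# Hypothetical per-token log-probabilities for three answer continuations.
logprobs = {
    "A": [-0.2, -0.3],
    "B": [-0.1, -0.1, -0.1, -0.4],
    "C": [-1.5],
}

raw_pick = pick_by_loglikelihood(logprobs)                    # sums favour "A"
norm_pick = pick_by_loglikelihood(logprobs, normalize=True)   # averages favour "B"
gen_pick = pick_by_generation("The answer is B.", ["A", "B", "C"])
```

The two loglikelihood variants disagree on this toy input, which is one reason scoring choices need to be reported alongside results. The generation path depends on parsing: an output such as "A possible answer is C" would be read as "A" by this naive pattern.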

HuggingFace Community Interactions

This section covers interactions and inquiries within the HuggingFace community. Topics range from entrepreneurial questions and model training queries to personal projects, multimodal models, and guidance on inference APIs. Users also share links to resources on AI developments, course offerings, code repositories, and projects. The community discusses Rust programming, model training achievements, educational datasets, new model releases, chatbot development, and the coordination of reading group sessions. Peer learning opportunities, advice on fine-tuning and deployment, and shared resources exploring the Big Data Technology Ecosystem are also highlighted.

HuggingFace Diffusion Discussions

- Slow Down, Power User!: HuggingMod gently reminded @user to temper their enthusiasm and reduce the frequency of their messages with a friendly nudge to slow down a bit 🤗.

- Quest for SDXL-Lightning LoRA Knowledge: @happy.j asked for assistance on how to integrate SDXL-Lightning LoRA with a standard SDXL model, looking for answers on fine-tuning or creating their version. Recommendations included training a regular SDXL model and applying LoRA for acceleration or merging SDXL-Lightning LoRA before further training, with advanced techniques like MSE loss and adversarial objectives.

- Tips from ByteDance for SDXL Magic: ByteDance suggested starting with a traditional SDXL model and adding LoRA for acceleration, merging SDXL-Lightning LoRA with a trained SDXL model, and using advanced techniques such as merging and using adversarial objectives for those wanting a challenge.

Links mentioned:

- ByteDance/SDXL-Lightning · finetune: https://huggingface.co/ByteDance/SDXL-Lightning/discussions/11?utm_source=ainews&utm_medium=email&utm_campaign=ainews-inflection-25-at-94-of-gpt4-and-pi-at-6m#65de29cdcb298523e70d5104

Q&A and Discussions on Different Topics

This section documents various discussions and Q&A sessions. Troubleshooting topics include Python package dependency conflicts, manual installation tactics, masking mechanisms during training, CSV loader timeout errors, query scaling with large datasets, the lack of documentation on Azure AI Search integration, and issues with chat chain construction. Other threads cover group chatting experiences, moderation layers on models, phishing attempts on Discord, user profile construction using Pydantic and LangChain, and system prompts for profile creation. Community members also shared their work: building RAG from scratch, releasing a ChromaDB Plugin, exploring architectural and medical generative scripts, introducing the ask-llm library for LLM integration in Python projects, and announcing workshops on vision models in production. Additionally, projects such as an infinite craft game, memes made with Mistral and Giphy, a Flash Attention project in CUDA, and educational resources on Nvidia CUDA were highlighted.

CUDA Mode Updates

The CUDA Mode section provides valuable insights into various discussions related to CUDA programming and technologies. One discussion highlights bandwidth revelations with Nvidia's H100 GPU and architectural comparisons between different Nvidia models. Another discussion focuses on handling coarsening effects on performance while sharing insights on CUDA CuTe DSL and dequantization optimization in CUDA programming. Additionally, the section covers topics like torch messages, algorithms, ring-attention, and off-topic discussions related to CUDA programming and technologies. These discussions offer a deep dive into specific CUDA-related topics, exchanges of ideas, and sharing of relevant links for further exploration.

Alignment Lab AI

This section covers interactions and discussions within the Alignment Lab AI channel. It includes welcoming new members, brainstorming sessions for the Orca-2 project, data augmentation strategies, and humorous remarks about AI model choices. Additionally, there are conversations about recommendations for German-language models and a positive experience shared about the Hermes Mixtral model.

FAQ

Q: What is the new model Yi-9B launched on Hugging Face?

A: Tonic_1 launched Yi-9B, a new model in the Hugging Face collection.

Q: What features were introduced in the Gradio 4.20.0 update?

A: Gradio announced version 4.20.0, introducing features like supporting external authentication providers, a delete_cache parameter, a /logout feature, and the new gr.DownloadButton component.

Q: What is the focus of the Genstruct 7B model released by Nous Research?

A: The Genstruct 7B model focuses on user-provided context, building on ideas from Ada-Instruct to enhance reasoning capabilities.

Q: What were the highlighted topics of discussions in the OpenAI Discord channels?

A: Users shared information on navigating posting requirements, improving problem-solving with GPT-5, enhancing storytelling randomization, seeking help for GPT classifier development, positivity in role-play prompts, vision models for complex tasks, and more.

Q: What were some of the discussions in the LlamaIndex section?

A: Discussions in LlamaIndex covered topics like customization, document ingestion, vector store recommendations, and discrepancies in query responses between LLM and VectorStoreIndex.
